JC-AI Newsletter #10

- November 26, 2025

- 8993 Unique Views

- 5 min read

Fourteen days have passed, and it is time to present a fresh collection of readings that could influence developments in the field of artificial intelligence.

This newsletter focuses on examining how agentic AI systems improve accuracy, tutorials on agentic system architecture, and importnat security challenges arising from increased not only from agentic AI systems adoption. This edition of the AI newsletter includes compelling discussions and interviews about the future of AI and approaches.

article: Introducing SWE-grep and SWE-grep-mini: RL for Multi-Turn, Fast Context Retrieval

authors: Ben Pan, Carlo Baronio, Albert Tam, Pietro Marsella and others

date: 2025-10-16

desc.: Modern coding agents face a fundamental trade-off between speed and intelligence. The article presents SWE-grep and SWE-grep-mini, trained fast agentic models specialized in highly parallel context retrieval. These models match the retrieval capabilities of frontier coding models while requiring an order of magnitude less time.

category: research

article: Nemotron Elastic: Towards Efficient Many-in-One Reasoning LLMs

authors: Ali Taghibakhshi, Sharath Turuvekere Sreenivas, Saurav Muralidharan, Ruisi Cai, Marcin Chochowski and others

date: 2025-11-20

desc.: Training a family of large language models targeting multiple scales and deployment objectives is prohibitively expensive. Recent work on model compression through pruning and knowledge distillation has reduced this cost, but still requires substantial computational resources, increasing costs per compressed model. This paper presents Nemotron Elastic, the first elastic training framework for reasoning-capable LLMs. While the Nemotron Elastic framework achieves good results, it still has potential for future research. (NVIDIA)

category: research

article: Cognitive Foundations for Reasoning and Their Manifestation in LLMs

authors: Priyanka Kargupta, Shuyue Stella Li, Haocheng Wang, Jinu Lee and others

date: 2025-11-20

desc.: Large language models successfully solve complex problems yet fail on simpler variants, suggesting they achieve correct outputs through mechanisms fundamentally different from human reasoning. A meta-analysis of 1,598 LLM reasoning papers reveals that the research community concentrates on easily quantifiable behaviors while neglecting meta-cognitive controls. The paper documents systematic structural differences and proposes connecting cognitive science with research on model capabilities rather than pursuing various shortcuts.However, the presented results leave unclear whether the proposed guidance enables genuine deployment of latent capabilities or simply helps models retrieve cached reasoning patterns from training data.

category: research

article: Incorporating Self-Rewriting into Large Language Model Reasoning Reinforcement

authors: Jiashu Yao, Heyan Huang, Shuang Zeng, Chuwei Luo and others

date: 2025-11-20

desc.: Rather than traditional approaches that reward reasoning processes through reinforcement learning which can lead to issues such as over-thinking, focus on irrelevant aspects and etc., the paper presents a Self-Rewriting approach in which a model rewrites its own reasoning text and subsequently learns from the rewritten reasoning to improve its internal thought process quality. The results report improved accuracy of +0.6 alongside 46% shorter reasoning sequences. The article discusses the achieved results and related challenges, including trade-offs compared to standard approaches.

category: research

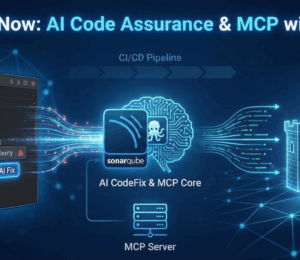

article: Hiding in the AI Traffic: Abusing MCP for LLM-Powered Agentic Red Teaming

authors: Strahinja Janjuesvic, Anna Baron Garcia, Sohrob Kazerounian

date: 2025-11-20

desc.: Today's 'Vibe Coding' approach enables developers to generate code without fully understanding its mechanics, including the orchestration of multi-agent swarms and sophisticated detection evasion strategies. While existing frameworks may use LLMs to issue post-exploitation commands, they often rely on traditional channels. The paper proposes an innovative Command & Control (C2) architecture leveraging the Model Context Protocol (MCP) for coordinating autonomous red teams of agents while addressing stealth and evasion aspects in depth. The article discusses differences between theoretical attack vectors and enterprise environments. Although the approach shows noticeable improvements, it comes with multiple unanswered questions for future research (MIT, Antropic).

category: research

article: JudgeBoard: Benchmarking and Enhancing Small Language Models for Reasoning Evaluation

authors: Zhenyu Bi, Gaurav Srivastava, Yang Li, Meng Lu, Swastik Roy and others

date: 2025-11-20

desc.: Although SLMs' ability to judge answers remains underexplored, recent studies show that small language models (SLMs) can perform competitively on reasoning tasks with appropriate prompting or fine-tuning. This paper proposes JudgeBoard, an evaluation pipeline capable of injecting SLMs to improve answer comparisons. Due to the limitations of SLMs, the paper introduces the Multi-Agent Judging (MAJ) framework, which outperforms standard approaches (Chain-of-Thought, etc.) by approximately 2% in accuracy. The paper reveals a significant performance gap in judging capability between SLMs and LLMs while highlighting the importance of multi-stage judging (Amazon).

category: research

article: Multi-Agent LLM Orchestration Achieves Deterministic, High-Quality Decision Support for Incident Response

authors: Philip Drammeh

date: 2025-11-19

desc.: Through multiple trials using a reproducible framework, the paper demonstrates that multi-agent orchestration fundamentally transforms LLM-based incident response quality compared to single-agent, error-prone solutions. The multi-agent response is treated as deterministic while introducing latency, however, speed is not the primary goal, provided it remains within acceptable thresholds. Despite the strong performance of multi-agent systems, multiple challenges remain, including LLM deadlocks, fine-tuning requirements, and latency constraints.

category: research

article: Hierarchical Token Prepending: Enhancing Information Flow in Decoder-based LLM Embeddings

authors: Xueying Ding, Xingyue Huang, Mingxuan Ju, Liam Collins and others

date: 2025-11-18

desc.: The paper proposes Hierarchical Token Prepending (HTP) to improve causal attention mechanisms by mitigating attention-level compression and introducing mean-pooling, enabling backward information flow that is critical for generating high-quality embeddings. HTP achieves consistent performance, especially in long-context settings. The article addresses future research directions.

category: research

article: Stanford AI Club: Jeff Dean on Important AI Trends

authors: Stanford AI Club

date: 2025-11-24

desc.: Jeff Dean is one of the most influential computer scientists of the modern computing era, best known as Google’s Chief Scientist and a co-founder of Google Brain. His work has shaped the foundations of large-scale distributed systems and modern machine learning—spanning breakthroughs in search infrastructure, deep learning frameworks like TensorFlow, and today’s frontier AI research. The video provides a timeline of basic technologies and approaches currently employed in the AI-LLM field.

category: youtube

article: Elon Musk Makes Shocking Future Predictions At U.S.-Saudi Arabia Forum Alongside Jensen Huang

authors: Forbes Breaking News

date: 2025-11-20

desc.: Elon Musk and Jensen Huang discuss technology at the U.S.-Saudi Arabia Investment Forum in Washington, D.C., offering an interesting perspective on the future. The interview presents a vision free from current societal constraints and structures such as money-based decisions, resource requirements, sustainability of technologies, or long-term impacts that may limit future evolution. The interview does not address crucial contemporary debates.

category: youtube, interview

article: AI Kill Switch for malicious web-based LLM agent

authors: Sechan Lee, Sangdon Park

date: 2025-09-26

desc.: While AI agents improve the ability to handle complex tasks, they simultaneously amplify the risks of malicious misuse, such as unauthorized collection of personally identifiable information (PII). The paper proposes an "AI Kill Switch" technique aimed at immediately identifying and stopping such malicious AI agent behavior. The key idea lies in identifying an effective defense prompt, which shows similarities to the "LLM as a judge" approach, and focuses on "Prompt Injection" and "Jailbreak-based prompt" forms of attacks. The paper discusses limitations such as the absence of real-world test cases and additional challenges.

category: research

article: BrowseSafe: Understanding and Preventing Prompt Injection Within AI Browser Agents

authors: Kaiyuan Zhang, Mark Tenenholtz, Kyle Polley and others

date: 2025-11-25

desc.: The integration of artificial intelligence (AI) agents into web browsers introduces security challenges beyond traditional web application threat models. The paper discusses identified attack vectors, such as prompt injection, and their impact within real-world environments, noting the low level of current understanding. The paper proposes a novel benchmark and multi-layer defense mechanism called BrowseSafe. Although the paper presents improvements, the complexity of prompt injection attacks remains an open investigation topic (Perplexity AI).

category: research

Don’t Forget to Share This Post!

Comments (0)

No comments yet. Be the first.