JC-AI Newsletter #3

- August 19, 2025

The first and second newsletters introduced a 14-day cadence, and even though it is the holiday season for many of us, we are sticking to the promised period.

This newsletter, vol. 3, brings a collection of valuable articles focusing on challenges that are commonly reported alongside breakthroughs in the field of artificial intelligence. Some of the articles leave questions open or contain suggestions for future research, which is understandable given the intensive development and use of agentic AI systems. They are still valuable to the community because they increase awareness and understanding of ongoing challenges.

The world influenced by LLMs is changing very quickly, so let's start...

article: Searching for Efficient Transformers for Language Modeling

authors: David R. So, Wojciech Mańke, Hanxiao Liu, Zihang Dai, Noam Shazeer, Quoc V. Le

Google Research, Brain Team

date: 2022-01-24

desc.: It has been reported that transformer training consumes a huge amount of energy. Transformers play a key role in current LLM-based AI systems, including OpenAI's GPT-3. The article describes efforts to reduce the environmental impact by finding ways to train transformers more efficiently. The experiments focused mainly on decoder-only models. The paper reports that the resulting architecture, named Primer, has a smaller training cost than the original Transformer for auto-regressive language modeling.

article: Carbon Emissions and Large Neural Network Training

authors: David Patterson, Joseph Gonzalez, Quoc Le, Chen Liang, Lluis-Miquel Munguia, Daniel Rothchild, David So, Maud Texier, and Jeff Dean

date: 2021-04-23

desc.: The article compares the energy use and carbon footprint of several large language models (T5, Meena, GShard, Switch Transformer and GPT-3), with the goal of refining earlier estimates for the Evolved Transformer approach. The article reflects on the benefits of carbon-free energy sources and provides suggestions on how to reduce the CO2 footprint. The research article mostly used programming languages such as Python and JavaScript.

article: Language Models are Few-Shot Learners

authors: Tom B. Brown, Ilya Sutskever, Dario Amodei, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner and others

date: 2020-01-06

desc.: The article focuses on improving LLMs by increasing the number of parameters. At the same time, the research reports a number of harmful behaviors that such models can exhibit. As the quality of generated text and responses from LLM-based systems increases, existing barriers to misuse may be lowered. The models can be used for socially harmful activities, including spam, phishing, abuse of legal and governmental processes, fraudulent academic essay writing, and social engineering pretexting. The article suggests future research in this area.

article: Do we understand the value of AI knowledge?

authors: Miro Wengner

date: 2025-08-12

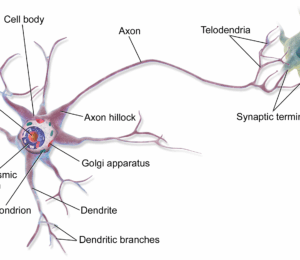

desc.: The article discusses the value of information generated by LLM systems. It evaluates the use of neural networks in the field of mechanical systems control and compares the potential results with the current achievements of LLM systems. The article also attempts to evaluate the potential challenges of acquiring knowledge or education through such systems, and their impact. The conclusions include the question of whether the basic rules of logic and Boolean algebra remain valid in such a process.

article: Programming by Backprop: LLMs Acquire Reusable Algorithmic Abstractions During Code Training

authors: Jonathan Cook, Silvia Sapora, Arash Ahmadian, Akbir Khan, Tim Rocktäschel and others

date: 2025-07-23

desc.: The article aims to determine whether LLMs internalise reusable algorithmic abstractions (patterns) during code training, or whether they simply offer hidden probabilistic evaluations of the input data. Although this question remains unanswered, the article reports a positive improvement from employing backpropagation algorithms. Some prior work illuminates critical failures in the algorithmic reasoning of language models (LMs), as LMs may use heuristics to solve arithmetic problems rather than solving them algorithmically. The article demonstrates how training on code may lead to improved general-purpose reasoning, and suggests future research.

article: On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

authors: Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, Shmargaret Shmitchell

date: 2021-03-01

desc.: The article explores how highly parameterized language models increase users' tendency to find meaning where none exists, because the text that LLMs generate reads as meaningful. This may lead to the general acceptance of synthetic texts as valuable. Although the article focuses on examining racist, sexist, ableist, extremist, and other harmful patterns in training datasets, the implications of LLMs' capabilities can extend to other industry sectors.

Enjoy reading and look forward to the next one!
