Agents Meet Databases: The Future of Agentic Architectures

- October 02, 2025

With 2025 hailed as "the year of agents" by NVIDIA CEO Jensen Huang and OpenAI CPO Kevin Weil, AI agents are increasingly of interest to organizations across industries. These autonomous systems will often need to interact with databases, where much of the world’s valuable data resides. According to IDC’s Data Age 2025 report, enterprises will manage nearly 60% of the world’s data by 2025, most of it organized in databases. As a result, databases will be central to agentic architectures, and the success of agent deployments will depend on how well they connect and interact with them.

Enter the Model Context Protocol (MCP), originally developed by Anthropic. MCP has quickly become popular as a standardized method for connecting tools and data to agentic systems, offering a new approach to agent-database interoperability. But this raises key questions for AI developers: What do agentic architectures involving databases actually look like? And what should you consider when building one?

A Quick Overview of Agents

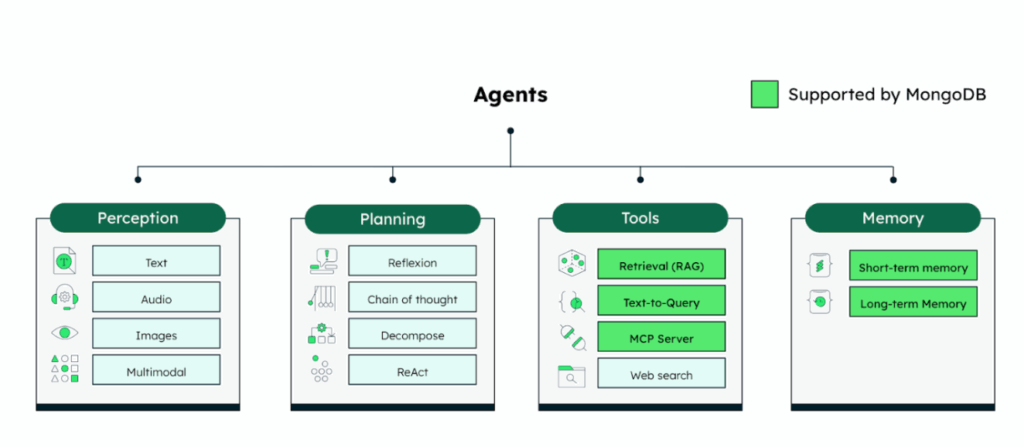

Agents are LLM-based systems that have access to tools: functionalities or resources they can use to perform tasks beyond their native capabilities. What defines them is the ability to autonomously decide when and how to use these tools, whether independently, within a structured workflow, or with a human in the loop.

Figure 1. Core components of an agent: perception, planning, tools, and memory.

Granting agents the ability to directly query and interact with database data enables a range of powerful use cases. Examples include generating application code based on available collections and schemas, or retrieving the latest customer information to resolve support issues.

Giving agents this kind of access raises several architectural design decisions that affect performance, scalability, and security. In particular, how database querying tools are exposed plays a critical role. When providing database querying capabilities to AI agents, two main paths emerge: leveraging standardized tools with MCP or building a custom integration tailored to specific needs.

Path 1: Standardized Integration with MCP Servers

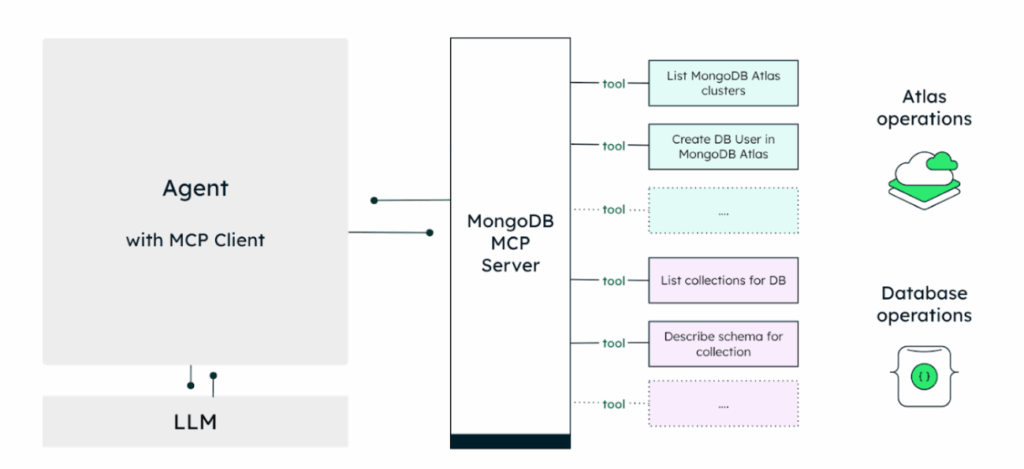

MCP servers offer a plug-and-play approach to integrating agents with databases. As someone working at MongoDB, I may be biased, but the MongoDB MCP Server is a great example. It simplifies connecting to a MongoDB database and querying data through MCP, making it easy for various agent-based assistants like Cursor, Windsurf, Claude Desktop, or any MCP-enabled agentic system to interact with your data.

MCP provides agents with a standardized interface for database interaction. These servers can be deployed locally or remotely. A remote server allows multiple clients to access the same instance, making it ideal for shared environments. Your choice between local and remote deployment depends on your performance, scalability, and security needs. Remote deployments, in particular, may require additional considerations such as authentication mechanisms to ensure secure access.

Figure 2. Architecture diagram showing an agent interfacing with the MongoDB MCP Server.
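To make the "standardized interface" concrete, here is a minimal sketch of the message an MCP client sends when an agent decides to call a database tool. MCP messages are JSON-RPC 2.0, and tool invocations use the `tools/call` method. The tool name `find` and its argument names (`database`, `collection`, `filter`, `limit`) are assumptions made for illustration, as are the sample database and email address; check the server's tool listing for the names it actually exposes.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Assumed tool name and argument shape, for illustration only.
request = build_tool_call(
    "find",
    {
        "database": "support",
        "collection": "customers",
        "filter": {"email": "ada@example.com"},
        "limit": 1,
    },
)

print(json.dumps(request, indent=2))
```

Because every MCP server accepts the same envelope, swapping one database server for another mostly means changing the tool name and arguments rather than the client code.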

The upsides of this approach are:

- Shift in ownership: Leveraging an officially supported server, like the one from MongoDB, means the provider handles ongoing support and updates, ensuring agents have access to continuously improved capabilities without manual code updates from the teams building and deploying those agents.

- Plugin-like integration: Provides an out-of-the-box solution, making it the fastest way for teams to prototype or deploy production-grade agents that interact with a database, similar to a plugin system.

- Ideal for operational database usage: This approach is well-suited for the most common database interactions, such as basic CRUD-style querying and database management operations.

The primary tradeoff with using pre-built MCP servers is limited customization; compared to building a custom integration, you have less control over precisely how the tools behave under the hood. Although MCP simplifies integrating predefined tools with agents, it still requires careful consideration, as MCP isn't inherently secure (more about this later).

Path 2: Custom Integrations for Control and Flexibility

Building a custom implementation offers a more flexible route for teams that need fine-grained control over their database interactions. Frameworks like LangChain simplify and accelerate this process. For instance, the MongoDB-LangChain integration package provides tools for implementing natural language queries, allowing developers to build AI applications and agents that interact with MongoDB. This supports both intuitive interfaces for data exploration and autonomous agents, such as customer support assistants, that retrieve data on their own. The toolkit is customizable and extensible: developers can define precisely which database operations are exposed to the agent, including schema inspection, query generation, validation, or more complex scenarios, and design how those tools are called.
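The idea of exposing only chosen operations can be sketched without any framework. The example below is not the MongoDB-LangChain API; it is a hypothetical, framework-free registry in which `ToolRegistry`, `inspect_schema`, and `find_customers` are illustrative names and an in-memory list stands in for a real collection.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# In-memory stand-in for a collection; a real integration would use a driver.
CUSTOMERS = [
    {"_id": 1, "name": "Ada", "plan": "pro"},
    {"_id": 2, "name": "Grace", "plan": "free"},
]


@dataclass
class ToolRegistry:
    """Holds the only operations the agent is allowed to call."""

    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)

    def register(self, name: str):
        def decorator(fn):
            self.tools[name] = fn
            return fn
        return decorator

    def call(self, name: str, **kwargs):
        # Anything that was never registered is rejected outright.
        if name not in self.tools:
            raise PermissionError(f"tool {name!r} is not exposed to the agent")
        return self.tools[name](**kwargs)


registry = ToolRegistry()


@registry.register("inspect_schema")
def inspect_schema() -> dict[str, str]:
    """Return field names and Python type names from the first document."""
    return {key: type(value).__name__ for key, value in CUSTOMERS[0].items()}


@registry.register("find_customers")
def find_customers(plan: str) -> list[dict]:
    """Return customers on the given plan."""
    return [doc for doc in CUSTOMERS if doc["plan"] == plan]


print(registry.call("inspect_schema"))
print(registry.call("find_customers", plan="pro"))
```

An agent wired to this registry can inspect the schema and look up customers, but a request for an unregistered tool such as `delete_customers` raises `PermissionError` instead of reaching the data.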

The main advantages of this approach are:

- Full control and ownership: You keep complete control over tool behavior and the exact database operations your agent can perform.

- Support for advanced use cases: This approach allows support for advanced and domain-specific use cases that standard pre-built tools might not cover.

- Custom optimization: Custom implementations can be tightly aligned with internal data policies and specific business logic, so they can be optimized for your requirements.

However, custom development typically comes with tradeoffs like higher development overhead and full ownership responsibility for the integration. This path is ideal for teams building agents tailored to unique workflows, where agents are the core product, or where compliance, privacy, or performance requirements exceed what standard solutions can support.

Accuracy, Security, and Performance Considerations

Granting agents direct database access, whether through MCP or custom tools, introduces significant challenges related to accuracy, security, and performance. As these technologies evolve, implementing preventive measures and adhering to best practices is critical for reliable and scalable agentic operations.

Accuracy: Ensure Reliable Query Generation

Query accuracy depends heavily on the LLM’s capabilities and the quality of the provided schema or data samples. Ambiguous or incomplete metadata tends to produce incorrect or suboptimal queries. When implementing agentic text-to-query systems, it’s important to enforce input/output validation, implement rigorous testing, and establish guardrails or human review for complex or sensitive operations.
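As one possible form of output validation, the sketch below checks an LLM-generated MongoDB-style filter before it is executed. The allowed field names and the operator allow-list are assumptions chosen for illustration. The check is deliberately conservative: it rejects unknown fields, any operator outside the list (for example `$where` or `$regex`), and nested documents used as values.

```python
# Hypothetical schema and operator allow-list for one collection.
ALLOWED_FIELDS = {"name", "plan", "created_at"}
COMPARISON_OPERATORS = {"$eq", "$ne", "$gt", "$gte", "$lt", "$lte", "$in"}
LOGICAL_OPERATORS = {"$and", "$or"}


def validate_filter(query_filter, path="filter"):
    """Return a list of problems; an empty list means the filter passed."""
    if not isinstance(query_filter, dict):
        return [f"{path}: expected an object, got {type(query_filter).__name__}"]
    problems = []
    for key, value in query_filter.items():
        if key in LOGICAL_OPERATORS:
            if not isinstance(value, list):
                problems.append(f"{path}.{key}: expected a list of clauses")
                continue
            # Validate every sub-clause of $and / $or recursively.
            for index, clause in enumerate(value):
                problems += validate_filter(clause, f"{path}.{key}[{index}]")
        elif key.startswith("$"):
            problems.append(f"{path}: operator {key} is not allowed")
        elif key not in ALLOWED_FIELDS:
            problems.append(f"{path}: unknown field {key!r}")
        elif isinstance(value, dict):
            # A dict value is treated as an operator expression, e.g. {"$gt": 5}.
            for operator in value:
                if operator not in COMPARISON_OPERATORS:
                    problems.append(f"{path}.{key}: operator {operator} is not allowed")
    return problems


print(validate_filter({"plan": "pro"}))
print(validate_filter({"$where": "sleep(1000)"}))
```

Returning a list of problems rather than raising on the first one lets the agent receive all issues at once and regenerate the query, or lets the system route the request to human review.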

To generate accurate MongoDB queries, you can leverage the following resources and tools:

- MongoDB’s natural language query best practices and official guidelines to improve query precision and reliability.

- The MongoDB MCP Server, which allows developers to build tools and agentic systems that interact with MongoDB, supporting both administrative tasks and core data operations like querying and indexing.

- The MongoDB-LangChain integration, which offers ready-to-use tools for natural language queries, including collection listing, schema retrieval, sample data access, and pre-execution query validation.

Security: Maintain Protection and Guardrails

Direct database access by AI agents creates unprecedented privacy and data governance challenges. MCP, in particular, presents new security threats, such as prompt injection and tool poisoning, because LLMs may follow instructions embedded in the content and tool descriptions they process. While relatively new, MCP-related threats and risk mitigations have been researched and documented by multiple organizations such as Red Hat and Writer. Strict guardrails should be enforced to mitigate risks of malicious activity and sensitive data exfiltration.

As a general best practice, agents must operate under strict least-privilege principles, using roles and policies that grant only the permissions required for their specific tasks.

Another critical concern involves sensitive information sharing with LLM providers when agents access data. Organizations need architectural controls over what information (database names, collection names, data samples) reaches the LLM, with the ability to disable this entirely.
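One way to picture such a control is a small gate that every data sample passes through before it is placed in a prompt. This is a minimal sketch under stated assumptions: the sensitive field names, the `[REDACTED]` placeholder, and the `prepare_sample` helper are all hypothetical and not part of any MongoDB tool.

```python
# Hypothetical list of fields that must never reach the LLM provider.
SENSITIVE_FIELDS = {"email", "ssn"}


def prepare_sample(docs, share_samples=True, max_docs=2):
    """Return the documents that may be included in an LLM prompt."""
    if not share_samples:
        # Sample sharing is disabled entirely.
        return []
    return [
        {key: "[REDACTED]" if key in SENSITIVE_FIELDS else value
         for key, value in doc.items()}
        for doc in docs[:max_docs]
    ]


docs = [
    {"name": "Ada", "email": "ada@example.com", "plan": "pro"},
    {"name": "Grace", "email": "grace@example.com", "plan": "free"},
    {"name": "Linus", "email": "linus@example.com", "plan": "pro"},
]

print(prepare_sample(docs))
print(prepare_sample(docs, share_samples=False))
```

Keeping the switch (`share_samples`) and the redaction list in one place makes it easier to audit exactly what leaves the organization's boundary.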

To address these security concerns, a layered access control model is essential.

- Upstream: fine-grained role-based access ensures agents interact only with authorized services and data, in line with the principle of least privilege. For example, MongoDB Atlas enables precise control over access scope (e.g., specific services or databases) and access type (read-only vs. read-write).

- Downstream: additional restrictions can be applied to limit functionality or enforce read-only access. For instance, the MongoDB MCP Server can enforce a read-only mode that fully disables write and update operations.

Together, these controls reduce risk and ensure proper governance over agent-database interactions.
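The two layers can be thought of as independent checks that must both pass. The sketch below models that logic only; it does not reflect how MongoDB Atlas or the MongoDB MCP Server implement access control, and the `Role` type, operation names, and `is_allowed` helper are hypothetical.

```python
from dataclasses import dataclass

# Simplified operation groups, for illustration only.
READ_OPERATIONS = frozenset({"find", "count"})
WRITE_OPERATIONS = frozenset({"insert", "update", "delete"})


@dataclass(frozen=True)
class Role:
    """Upstream grant: which databases and operations a role may use."""

    name: str
    databases: frozenset
    operations: frozenset


def is_allowed(role, database, operation, server_read_only=True):
    """Allow an operation only if both layers permit it."""
    # Upstream layer: role-based scope (database) and type (operation).
    upstream_ok = database in role.databases and operation in role.operations
    # Downstream layer: a read-only server blocks every write.
    downstream_ok = not (server_read_only and operation in WRITE_OPERATIONS)
    return upstream_ok and downstream_ok


support_agent = Role(
    name="support_agent",
    databases=frozenset({"support"}),
    operations=READ_OPERATIONS | WRITE_OPERATIONS,
)

print(is_allowed(support_agent, "support", "find"))
print(is_allowed(support_agent, "support", "insert"))
print(is_allowed(support_agent, "billing", "find"))
```

Even though this role is granted write operations upstream, the read-only flag downstream still blocks the insert, which is the point of layering: a mistake in one layer is contained by the other.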

Performance: Manage Unpredictable Agentic Workloads

The non-deterministic nature of LLMs makes agent workload patterns inherently unpredictable. Agents can query the database frequently and in bursts, which can severely impact performance and creates a key operational challenge. In this context, it is critical to choose a database that keeps serving its primary workloads while allowing agents to scale efficiently.

A recommended approach for agentic workloads is to isolate them from other database operations. This isolation offers two key benefits. First, it ensures that only designated instances handle agent workloads, preserving production performance while enabling flexible agent scalability. Second, it allows for tailored configurations on these instances, such as setting them to read-only mode, to optimize for specific use cases. With MongoDB, this translates to using replica sets, which support independent scaling of read and write operations. In addition, autoscaling, along with dedicated, optimized search nodes, further improves agent performance for search-intensive tasks. Combining workload isolation with autoscaling is critical for deploying reliable and scalable agents.
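One way to route agent reads away from the primary is through the connection string the agent uses. The sketch below builds a MongoDB URI with the standard `replicaSet`, `readPreference`, and `readPreferenceTags` options. The host names, the replica set name `rs0`, and the `workload:agent` tag are assumptions; the tag only has an effect if the designated replica set members are actually configured with it. Credentials are omitted.

```python
from urllib.parse import urlencode


def agent_connection_uri(hosts, database, replica_set, tag="workload:agent"):
    """Build a URI that sends reads to tagged secondary members."""
    options = {
        "replicaSet": replica_set,
        # Route reads to secondaries so the primary keeps serving production.
        "readPreference": "secondary",
        # Restrict reads to members carrying this (assumed) tag.
        "readPreferenceTags": tag,
    }
    # Keep ':' unescaped so the tag reads as key:value in the URI.
    query = urlencode(options, safe=":")
    return f"mongodb://{','.join(hosts)}/{database}?{query}"


uri = agent_connection_uri(
    hosts=["db1.example.net:27017", "db2.example.net:27017"],
    database="support",
    replica_set="rs0",
)
print(uri)
```

Read preference only affects where reads are served; writes still go to the primary, so this pairs naturally with a read-only role or server mode for the agent.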

The Agentic Future Depends on Databases

As AI agents continue to evolve into powerful, autonomous systems, their ability to interact directly with enterprise data becomes essential. With databases housing the majority of the world’s information, enabling access is no longer optional. MCP offers a standardized, fast path to agent-database integration, ideal for common use cases. For deeper customization, building bespoke integrations provides granular control and extensibility.

Regardless of the path chosen, developers must prioritize accuracy, enforce strict security controls, and ensure scalability. In this new era of agents, the real competitive edge lies in choosing a modern and flexible database that fits these requirements.
