How Deep Netts and Java AI Transformed Particle Physics at the US DOE’s Jefferson Lab

- May 19, 2025

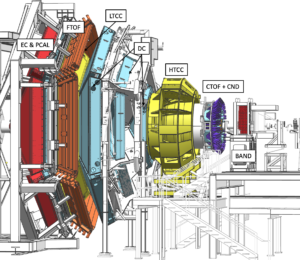

At the intersection of nuclear physics and artificial intelligence, Jefferson Lab is leveraging Java-based AI to overcome one of the most computationally intense challenges in modern science: reconstructing particle trajectories from high-frequency electron scattering experiments.

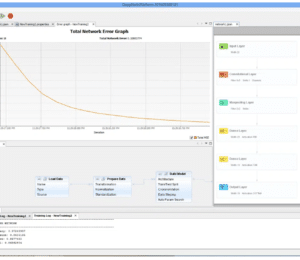

Each second, over 16,000 interactions are recorded as particles pass through a complex array of drift chambers. Traditional reconstruction methods—based on mathematical likelihood calculations—must sift through thousands of signal combinations to identify valid particle tracks. The result? Up to 300 milliseconds of processing per event on a single core, leading to massive compute demands.
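
Those two figures set the scale of the problem. At 16,000 events per second and up to 300 ms of single-core time per event, keeping pace with the detector takes as many as 16,000 × 0.3 = 4,800 cores running continuously. The short Java sketch below does that arithmetic and also shows why the number of candidates grows so fast: if a track must take one segment from each of several layer groups, the per-group counts multiply. The six groups with four candidate segments each are hypothetical values chosen for illustration, not Jefferson Lab's detector parameters.

```java
// Back-of-the-envelope load estimate for likelihood-based track reconstruction.
// The event rate and per-event time come from the article; the segment
// counts per layer group are hypothetical.
class ReconstructionLoad {

    /** Core-seconds of CPU needed per second of data taking. */
    static double coresNeeded(double eventsPerSecond, double secondsPerEvent) {
        return eventsPerSecond * secondsPerEvent;
    }

    /**
     * Number of track candidates when one segment must be picked from each
     * layer group: the product of the per-group segment counts.
     */
    static long candidateCombinations(int[] segmentsPerGroup) {
        long total = 1;
        for (int n : segmentsPerGroup) {
            total = Math.multiplyExact(total, n); // throws on overflow instead of wrapping
        }
        return total;
    }

    public static void main(String[] args) {
        double cores = coresNeeded(16_000, 0.300);
        System.out.printf("Cores needed to keep pace: %.0f%n", cores);

        // Hypothetical: 6 layer groups with 4 candidate segments in each.
        int[] segments = {4, 4, 4, 4, 4, 4};
        System.out.println("Combinations per event: " + candidateCombinations(segments));
    }
}
```

Even this modest hypothetical case yields 4^6 = 4,096 combinations per event, and each extra noise hit in any group multiplies the total again.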

To address this, Jefferson Lab turned to Deep Netts, a Java-based deep learning library that significantly accelerated their workflow. Instead of calculating magnetic fields and testing every possible signal combination, a neural network built with Deep Netts identifies valid particle tracks in milliseconds. This filters out noise and narrows billions of interactions down to a manageable set for high-precision physics analysis.
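
The filtering step can be pictured as a scoring function applied to each candidate combination, with only high-scoring candidates passed on to the expensive fit. The sketch below is a hand-written one-hidden-layer network in plain Java; it is not the Deep Netts API, and the two-value feature vector, the weights, and the 0.5 threshold are all hypothetical. Inference costs only a handful of multiply-adds per hidden unit, which illustrates why a trained network can be so much cheaper than searching combinations with likelihood fits.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: a hand-rolled one-hidden-layer network that scores
// candidate tracks. This is NOT the Deep Netts API; the feature layout,
// weights, and threshold are hypothetical.
class TrackFilter {
    private final double[][] w1; // hidden-by-input weights
    private final double[] b1;   // hidden biases
    private final double[] w2;   // output weights, one per hidden unit
    private final double b2;     // output bias

    TrackFilter(double[][] w1, double[] b1, double[] w2, double b2) {
        this.w1 = w1;
        this.b1 = b1;
        this.w2 = w2;
        this.b2 = b2;
    }

    /** Returns a score in (0, 1): the estimated probability that the candidate is a real track. */
    double score(double[] features) {
        double out = b2;
        for (int h = 0; h < w1.length; h++) {
            double z = b1[h];
            for (int i = 0; i < features.length; i++) {
                z += w1[h][i] * features[i];
            }
            out += w2[h] * Math.max(0.0, z); // ReLU hidden activation
        }
        return 1.0 / (1.0 + Math.exp(-out)); // sigmoid output
    }

    /** Keeps only candidates whose score reaches the threshold. */
    List<double[]> filter(List<double[]> candidates, double threshold) {
        List<double[]> kept = new ArrayList<>();
        for (double[] c : candidates) {
            if (score(c) >= threshold) {
                kept.add(c);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        TrackFilter net = new TrackFilter(
                new double[][] {{1, 1}, {-1, -1}},
                new double[] {0, 0},
                new double[] {4, -4},
                -2);
        List<double[]> candidates = List.of(
                new double[] {1, 1}, new double[] {0, 0}, new double[] {-1, -1});
        System.out.println("kept " + net.filter(candidates, 0.5).size() + " of " + candidates.size());
    }
}
```

With these weights only the first candidate scores above 0.5, so `main` prints `kept 1 of 3`.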

“AI helps us bypass the brute-force mathematics,” explains Gagik, the lead developer. “We train the model to distinguish valid from invalid signal combinations. This eliminates the need for complex simulations in every step.”
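
Distinguishing valid from invalid signal combinations is a binary classification problem: each training example is a candidate combination labeled 1 (real track) or 0 (noise), and the model learns to output the probability of label 1. The minimal sketch below swaps in logistic regression trained by full-batch gradient descent on cross-entropy loss as a stand-in for a neural network; it does not use Deep Netts, and the two features and the synthetic data are hypothetical.

```java
import java.util.Random;

// Minimal sketch of training a binary "valid vs. invalid combination" classifier.
// Logistic regression stands in for a neural network; the synthetic data is
// hypothetical and only illustrates the training loop.
class CombinationClassifier {
    final double[] w;
    double b;

    CombinationClassifier(int numFeatures) {
        w = new double[numFeatures];
    }

    /** Probability that x is a valid combination. */
    double predict(double[] x) {
        double z = b;
        for (int i = 0; i < w.length; i++) z += w[i] * x[i];
        return 1.0 / (1.0 + Math.exp(-z));
    }

    /** Full-batch gradient descent on binary cross-entropy loss. */
    void train(double[][] xs, int[] labels, double learningRate, int epochs) {
        int n = xs.length;
        for (int epoch = 0; epoch < epochs; epoch++) {
            double[] gradW = new double[w.length];
            double gradB = 0;
            for (int k = 0; k < n; k++) {
                double err = predict(xs[k]) - labels[k]; // dLoss/dz for sigmoid + cross-entropy
                for (int i = 0; i < w.length; i++) gradW[i] += err * xs[k][i];
                gradB += err;
            }
            for (int i = 0; i < w.length; i++) w[i] -= learningRate * gradW[i] / n;
            b -= learningRate * gradB / n;
        }
    }

    /** Fraction of examples classified correctly at a 0.5 threshold. */
    double accuracy(double[][] xs, int[] labels) {
        int correct = 0;
        for (int k = 0; k < xs.length; k++) {
            int guess = predict(xs[k]) >= 0.5 ? 1 : 0;
            if (guess == labels[k]) correct++;
        }
        return (double) correct / xs.length;
    }

    public static void main(String[] args) {
        // Hypothetical features: two residual-like values that are small for
        // valid combinations (label 1) and large for invalid ones (label 0).
        Random rng = new Random(42);
        int n = 400;
        double[][] xs = new double[n][2];
        int[] labels = new int[n];
        for (int k = 0; k < n; k++) {
            labels[k] = k % 2;
            double center = labels[k] == 1 ? 0.5 : 3.0;
            xs[k][0] = center + 0.3 * rng.nextGaussian();
            xs[k][1] = center + 0.3 * rng.nextGaussian();
        }
        CombinationClassifier clf = new CombinationClassifier(2);
        clf.train(xs, labels, 0.5, 500);
        System.out.printf("training accuracy: %.3f%n", clf.accuracy(xs, labels));
    }
}
```

The update term `predict(x) - label` is the gradient of cross-entropy loss with respect to the pre-sigmoid value, which keeps the loop short. A real training run would also measure accuracy on held-out validation data rather than on the training set.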

Why Java for AI?

While Python initially served as an entry point into machine learning, Jefferson Lab needed seamless integration with their existing Java-based infrastructure. The choice of Java wasn’t arbitrary—it provided essential portability, type safety, and scalability across their distributed computing environment, consisting of thousands of multi-core nodes with varying configurations.

“When you have to deploy across Red Hat 7, Alma 9, and everything in between, Python becomes a dependency nightmare,” Gagik noted. “Java lets us package everything in a single JAR file. It just works.”

This ability to run in batch mode, integrate with distributed systems, and maintain consistent performance regardless of hardware or OS version was critical. Deep Netts' design allowed for smooth training, validation, and deployment in Jefferson Lab’s private cloud—without reliance on commercial providers like AWS.
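
On a single multi-core node, batch-mode filtering maps naturally onto the JDK's parallel streams, with no dependency beyond the JVM itself. The sketch below uses a hypothetical placeholder `score` function in place of a trained model and counts the events that pass a threshold; it is an assumption for illustration and says nothing about how Jefferson Lab actually distributes work across its nodes.

```java
import java.util.stream.IntStream;

// Sketch of batch-mode filtering on one multi-core node using only the JDK.
// The per-event score function is a hypothetical placeholder for a trained model.
class BatchFilter {

    /** Placeholder score: sigmoid of the feature sum. A real job would call the trained network. */
    static double score(double[] event) {
        double sum = 0;
        for (double v : event) sum += v;
        return 1.0 / (1.0 + Math.exp(-sum));
    }

    /** Counts events scoring at or above the threshold, spreading the work across cores. */
    static long countAccepted(double[][] events, double threshold) {
        return IntStream.range(0, events.length)
                .parallel() // runs on the common ForkJoinPool, sized from availableProcessors()
                .filter(i -> score(events[i]) >= threshold)
                .count();
    }

    public static void main(String[] args) {
        int n = 100_000;
        double[][] events = new double[n][];
        for (int i = 0; i < n; i++) {
            // Hypothetical single-feature events alternating between likely and unlikely tracks.
            events[i] = new double[] {i % 2 == 0 ? 1.0 : -1.0};
        }
        System.out.println("cores available: " + Runtime.getRuntime().availableProcessors());
        System.out.println("accepted: " + countAccepted(events, 0.5) + " of " + n);
    }
}
```

Because `countAccepted` only filters and counts, the result is the same whether the stream runs in parallel or sequentially; here it reports 50000 accepted events out of 100000 on any machine, which is the kind of hardware-independent behavior the paragraph above describes.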

Cost Reduction & Efficiency Gains

Jefferson Lab operates on tight computational timelines, with multi-month pipelines for raw data reconstruction, production, and filtering. Introducing Deep Netts has drastically shortened the time to filter useful events, helping researchers prioritize and refine experiments faster.

“Using AI, we can filter billions of events down to 1,000 promising candidates in a single hour,” Gagik emphasized. “It’s not just about speed; it’s about focusing our computational power where it matters.”
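
Reducing billions of scored events to a fixed shortlist, such as the 1,000 candidates mentioned above, does not require storing or sorting every score. A standard technique, assumed here rather than taken from the lab's code, is a bounded min-heap: keep at most K entries and evict the weakest one whenever a better score arrives. That needs O(K) memory and O(N log K) time for N events. The scores in `main` are random placeholders, and the `record` requires Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;
import java.util.Random;

// Keeps the K highest-scoring events from a stream of any length in O(K) memory.
// Scores here are hypothetical; in practice they would come from the classifier.
class TopK {
    record Scored(long eventId, double score) {}

    private final int k;
    // Min-heap on score: the head is always the weakest retained event.
    private final PriorityQueue<Scored> heap =
            new PriorityQueue<>((a, b) -> Double.compare(a.score(), b.score()));

    TopK(int k) {
        this.k = k;
    }

    void offer(long eventId, double score) {
        if (heap.size() < k) {
            heap.add(new Scored(eventId, score));
        } else if (score > heap.peek().score()) {
            heap.poll(); // drop the current weakest candidate
            heap.add(new Scored(eventId, score));
        }
    }

    /** Returns the retained events, best first. */
    List<Scored> best() {
        List<Scored> out = new ArrayList<>(heap);
        out.sort((a, b) -> Double.compare(b.score(), a.score()));
        return out;
    }

    public static void main(String[] args) {
        TopK top = new TopK(1000);
        Random rng = new Random(7);
        for (long id = 0; id < 1_000_000; id++) {
            top.offer(id, rng.nextDouble()); // placeholder classifier scores
        }
        List<Scored> best = top.best();
        System.out.println("kept " + best.size() + " events; top score " + best.get(0).score());
    }
}
```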

Moreover, unlike large transformer models with slow inference, Deep Netts enabled the use of compact, efficient architectures optimized for high-frequency workloads. This not only improved throughput but also reduced infrastructure strain and costs.
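
The efficiency argument can be made concrete with a simple cost model. In a fully connected network, each pair of consecutive layers with widths a and b contributes a·b weights plus b biases, and one inference costs about a·b multiply-accumulates for that layer. The widths 36-32-16-1 below are hypothetical, not the lab's architecture; they give 1,729 parameters and 1,680 multiply-accumulates per candidate, roughly six orders of magnitude below the billions of parameters common in large transformer-based language models.

```java
// Rough cost model for a small fully connected network: parameter count
// (weights plus biases) and multiply-accumulates per inference.
// The layer widths in main are hypothetical.
class ModelSize {

    static long parameters(int[] layers) {
        long total = 0;
        for (int i = 1; i < layers.length; i++) {
            total += (long) layers[i - 1] * layers[i] + layers[i]; // weights + biases
        }
        return total;
    }

    static long multiplyAccumulates(int[] layers) {
        long total = 0;
        for (int i = 1; i < layers.length; i++) {
            total += (long) layers[i - 1] * layers[i]; // one MAC per weight
        }
        return total;
    }

    public static void main(String[] args) {
        int[] layers = {36, 32, 16, 1}; // hypothetical: 36 inputs, two hidden layers, 1 output
        System.out.println("parameters: " + parameters(layers));
        System.out.println("MACs per inference: " + multiplyAccumulates(layers));
    }
}
```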

Conclusion

Deep Netts and Java gave Jefferson Lab a powerful, scalable, and cost-effective way to implement AI in one of the most data-heavy scientific domains. By bringing AI to Java-based distributed systems, the lab has set a precedent for high-performance scientific computing without abandoning platform stability or increasing operational complexity.

This case shows that AI in Java is not just viable but production-ready and transformative.